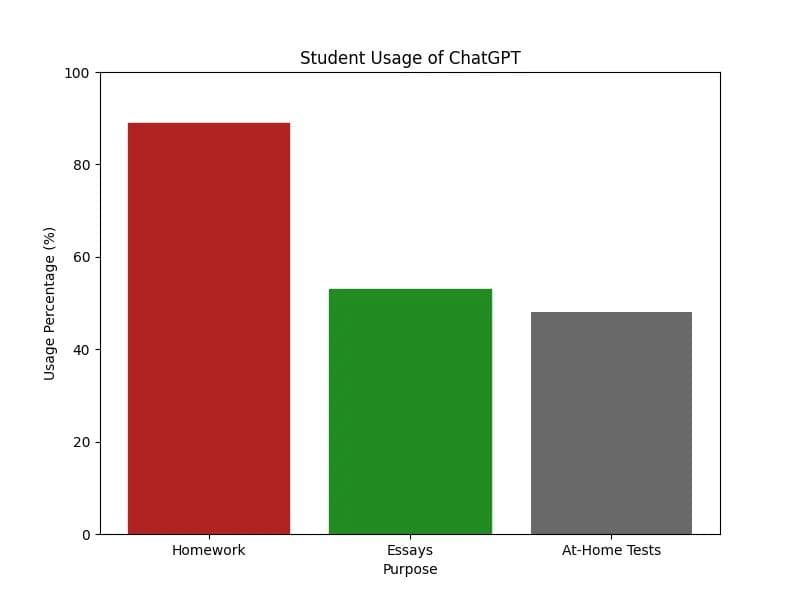

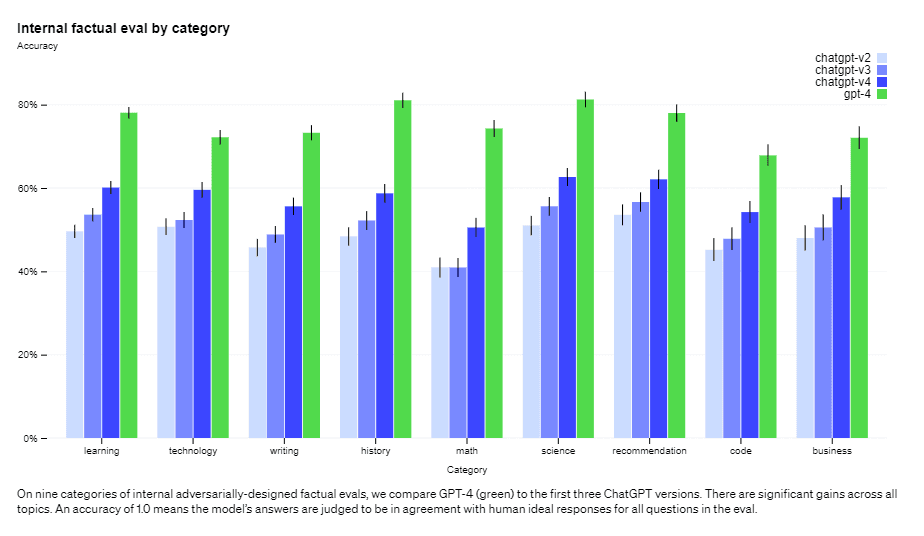

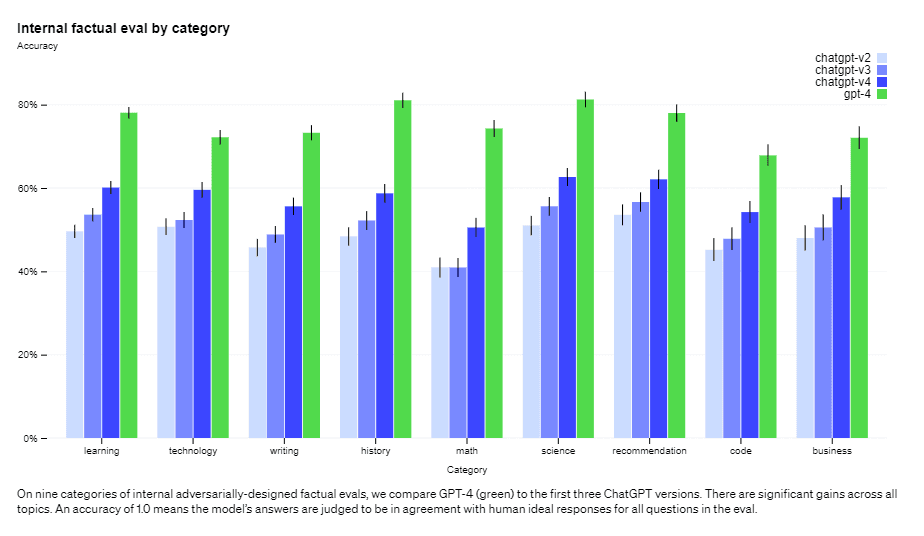

The first step to fact-checking your AI writing is to actually read it. I wish I were kidding, but with generative AI being so quick to create essays and articles, some people just hit send without even knowing what they’ve submitted.

If you want to make sure you don’t get accused of plagiarism, you will need to carefully read what your AI tool writes for you and research every point it makes, because AI models are prone to including misinformation as long as it suits the article or essay.

In this guide, I’m going to show you the steps you need to take to ensure your content doesn’t land you in front of a disciplinary committee.

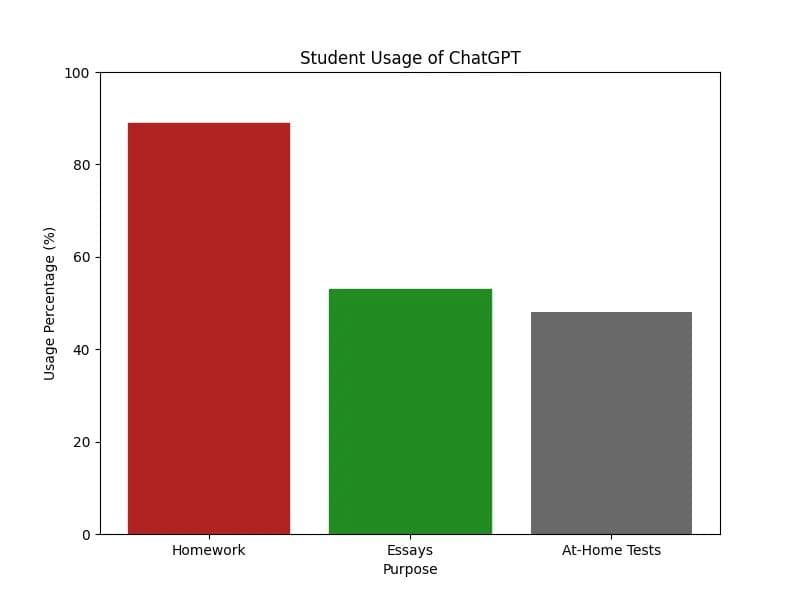

As you can see in this evaluation measuring the improvements in accuracy across updated GPTs, AI hallucinations are becoming less prevalent as the technology improves.

Student AI usage is so widespread that AI detection has become standard for reviewing every submission. Protect yourself by getting your own AI detector and fact-checking your outputs.

Table of Contents

- What is a Large Language Model (LLM)?

- How to Fact Check AI Outputs

- Is Using ChatGPT Considered Cheating?

- Conclusion

- FAQ

What is a Large Language Model (LLM)?

AI models like OpenAI’s ChatGPT use machine learning, trained on huge amounts of data drawn from the writing and content available to them on the internet. Using these data sets, AI models learn dynamic English so they can produce outputs for you on nearly any topic. The writing, however, still carries certain telltale watermarks that give away its source.

Low perplexity and low burstiness in the word choice and sentence structure are a dead giveaway that a piece of text is AI writing: AI writing is simple and precise, while human writing is complex, random, but natural. Most relevant to this article, artificial intelligence often generates AI hallucinations: completely made-up citations and sources created to emulate the essays or articles in its training data. If you are submitting AI writing for anything, in-depth fact-checking is essential if you don’t want the false information in your writing to get you in trouble.
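To make "burstiness" a little more concrete, here is a minimal Python sketch. It assumes burstiness can be approximated by how much sentence length varies (standard deviation divided by mean), which is a simplification of my own and not how any particular AI detector is confirmed to work. Perplexity is left out because measuring it requires a language model. The function names are hypothetical.

```python
import re
import statistics


def sentence_lengths(text: str) -> list[int]:
    """Word count of each sentence, using a naive split on ., ! or ?"""
    # Abbreviations such as "Dr." will confuse this split; fine for a rough check.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Assumed proxy: standard deviation of sentence length divided by the mean."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure any variation
    mean = statistics.mean(lengths)
    return statistics.pstdev(lengths) / mean if mean else 0.0


uniform = "One two three. Four five six. Seven eight nine."
varied = "Stop. This sentence is quite a bit longer than the first one. Why?"
print(burstiness(uniform), burstiness(varied))
```

Text with evenly sized sentences scores near zero, while a mix of short and long sentences scores higher. Treat the number as a rough signal about your draft, not as a verdict on who wrote it.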

How to Fact Check AI Outputs

The fact-checking process requires multiple steps to ensure every base is covered and no stone is left unturned. Here are the steps you should take to fact-check AI-generated content and remove the inaccuracies in your article or essay:

Research

Verifying every key point in your text is important. Key points include names, titles, quotes, dates, statistics, ages, events and the sequence of those events.

Be a skeptic. A healthy dose of suspicion is useful when verifying AI writing because many of the claims it comes up with are suspect.

Use Multiple Sources
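Before you go looking for sources, it can help to turn your draft into a checklist of claims to verify. The sketch below is one possible way to do that in Python. It only catches a few of the key-point types (quotes, years and percentages) using simple patterns I have assumed for illustration; names, titles and the sequence of events still need a human read. The `Claim` class, the `extract_claims` function and the rule of requiring two distinct sources are all hypothetical choices, not an established standard.

```python
import re
from dataclasses import dataclass, field

# Assumed patterns covering only some key-point types; extend as needed.
PATTERNS = {
    "quote": r'"[^"]+"|“[^”]+”',
    "year": r"\b(?:1[5-9]|20)\d{2}\b",
    "statistic": r"\b\d+(?:[.,]\d+)*\s?(?:%|percent\b)",
}


@dataclass
class Claim:
    kind: str
    text: str
    sources: list[str] = field(default_factory=list)

    def is_verified(self, minimum: int = 2) -> bool:
        # Assumption: a claim counts as verified once enough distinct sources confirm it.
        return len(set(self.sources)) >= minimum


def extract_claims(draft: str) -> list[Claim]:
    """Collect every pattern match in the draft as an unverified claim."""
    claims = []
    for kind, pattern in PATTERNS.items():
        for match in re.finditer(pattern, draft):
            claims.append(Claim(kind, match.group(0)))
    return claims


draft = 'In 1969, "one small step" was heard by 94% of households.'
for claim in extract_claims(draft):
    print(claim.kind, claim.text, claim.is_verified())
```

As you research, append each confirming source to the matching claim's `sources` list and revisit anything that still reports `False` before you submit.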

Check the sources your AI is using to ensure each citation comes from the original source and that other sources aren’t debunking the AI output. When doing this, make sure you’re only using reliable sources. University standards don’t let students cite whatever an internet search engine turns up as evidence; you will have to find information in research studies, academic journals or library databases.

Fact-Checking Tools

There are many websites where people can input their writing and check that the facts are accurate. One of those websites is factcheck.org. Google also has a fact checker, as do Microsoft and IBM.

Proofread

Lastly, double-check your AI writing! Read it again and again to ensure every comma is in the right place and every name is spelled correctly.

Is Using ChatGPT Considered Plagiarism?

Using ChatGPT is considered academic misconduct in higher education, and it will get your content penalized with deranking on search engines if Google catches on to your process. Part of protecting your process is fact-checking, but it also means using AI Detect both to understand how detectable your AI writing is and to humanize your AI content so it bypasses AI detection systems of any kind.